nsZip - NSP compressor/decompressor to reduce storage

nsZip is an open source PC tool that losslessly compresses and decompresses NSP files to save a lot of storage, while every NCA file keeps its exact same hash.

But it's more than just compression. A lot of data, such as NDV0 partitions or NCA3 fragment files, is removed during compression and recreated exactly during decompression, which saves even more space, especially on updates higher than v65536.

In addition, the NSZ format was designed with emulators in mind, so adding NSZ support to Yuzu will be possible in the future. Because NSZ contains decrypted NCAs, no keys would be needed just to extract game files. Zstandard is used as the compression algorithm: the data is split into 256 KB chunks that are compressed in parallel on multiple threads, while incompressible chunks are stored uncompressed. Because each chunk can be decompressed on its own, NSPZ/XCIZ allows random read access. At level 18 on an 8-thread CPU, Zstandard compresses at 43 MB/s and decompresses at 7032 MB/s while offering one of the best compression ratios compared to other compression algorithms.
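The chunked scheme described above can be sketched in Python. This is a minimal illustration, not nsZip's actual code: it swaps in the standard-library `zlib` for Zstandard (which would need a third-party binding), and the names `compress_blocks`, `read_at` and the `(offset, size, compressed)` index layout are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 256 * 1024  # 256 KB chunks, as described above


def _compress_block(block: bytes, level: int) -> tuple[bool, bytes]:
    """Compress one chunk; store it raw if compression does not make it smaller."""
    packed = zlib.compress(block, level)  # zlib stands in for Zstandard here
    if len(packed) < len(block):
        return True, packed
    return False, block


def compress_blocks(data: bytes, level: int = 9, workers: int = 8):
    """Return (payload, index); index holds one (offset, size, compressed) entry per chunk."""
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    # zlib releases the GIL while compressing, so threads give real parallelism.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda b: _compress_block(b, level), blocks))
    index, parts, offset = [], [], 0
    for compressed, payload in results:
        index.append((offset, len(payload), compressed))
        parts.append(payload)
        offset += len(payload)
    return b"".join(parts), index


def read_at(payload: bytes, index, pos: int, length: int) -> bytes:
    """Random read: only the chunks overlapping [pos, pos + length) are decompressed."""
    out = bytearray()
    first, last = pos // BLOCK_SIZE, (pos + length - 1) // BLOCK_SIZE
    for n in range(first, min(last, len(index) - 1) + 1):
        offset, size, compressed = index[n]
        raw = payload[offset:offset + size]
        block = zlib.decompress(raw) if compressed else raw
        start = pos - n * BLOCK_SIZE if n == first else 0
        out += block[start:]
    return bytes(out[:length])
```

Storing incompressible chunks raw keeps already-compressed data from growing, and the per-chunk index is what makes seeking possible without decompressing everything before the requested offset.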

How compression works: NSP => extract NSP => decrypt NCAs => trim fragments => compress to NSZ => verify correctness => repack as NSPZ file

How decompression works: NSPZ file => extract NSPZ => decompress NSZ => untrim fragments => encrypt NCAs => verify correctness => repack as NSP
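The "verify correctness" step in both pipelines boils down to proving that the round trip reproduces the original bytes. A minimal sketch, assuming the expected SHA-256 of each NCA is known up front; `zlib` stands in for the real NSZ step and `compress_and_verify` is a hypothetical name, not part of nsZip:

```python
import hashlib
import zlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def compress_and_verify(nca: bytes, expected_sha256: str, level: int = 9) -> bytes:
    """Compress, immediately decompress again, and only keep the result if the hash matches."""
    compressed = zlib.compress(nca, level)  # stand-in for the actual NSZ compression
    restored = zlib.decompress(compressed)
    if sha256_hex(restored) != expected_sha256:
        raise ValueError("round trip changed the data; refusing to write the output")
    return compressed
```

Checking right after compressing means a bug is caught while the original NSP still exists, rather than when someone later tries to restore it.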

Check out my GitHub page to report bugs, follow this project, post your suggestions or contribute.

GitHub link: https://github.com/nicoboss/nsZip/releases

For the new homebrew-compatible NSZ format, please see https://github.com/nicoboss/nsz

NSZ thread: https://gbatemp.net/threads/nsz-homebrew-compatible-nsp-xci-compressor-decompressor.550556/

Until NSZ support is implemented in nsZip, I don't see why you would want to use nsZip instead of NSZ.

Block-compressed NSZ is very similar to the beautiful NSPZ format, just without all the unnecessary complexity that made NSPZ infeasible for other software to implement. To avoid confusing people, I might remove NSPZ/XCIZ support in the future if I see no point in keeping it.

Differences between NSZ and NSPZ:

NSPZ/XCIZ:

- GitHub Project: https://github.com/nicoboss/nsZip

- Always uses block compression, allowing random read access so compressed games can be played in the future

- Decrypts the whole NCA

- Trims NDV0 fragments to their header and reconstructs them

- Only supported by nsZip and unfortunately doesn't really have a future

NSZ/XCZ:

- GitHub Project: https://github.com/nicoboss/nsz

- Uses solid compression by default. Block compression can be enabled using the -B option and will be the default for XCZ.

- Decrypts all sections while keeping the first 0x4000 bytes encrypted, and puts the information needed to re-encrypt the NCA into the header.

- Deletes NDV0 fragments, as they are of no use to end users and only exist to save CDN bandwidth

- Already widely used: supported by Tinfoil, SX Installer v3.0.0 and probably a lot of other software in the future
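The practical difference between solid compression (the NSZ default) and block compression (-B) can be sketched as follows. This is an illustration only, not the nsz implementation: it uses the standard-library `zlib` in place of Zstandard, and all function names are hypothetical. Because zlib only looks back 32 KB, it understates the ratio advantage solid compression gets with Zstandard's much larger window; what the sketch does show is the access pattern.

```python
import zlib

BLOCK_SIZE = 256 * 1024


def solid_compress(data: bytes, level: int = 9) -> bytes:
    # One stream over the whole file: matches can span chunk boundaries,
    # but there is no way to start decoding in the middle.
    return zlib.compress(data, level)


def solid_read(blob: bytes, pos: int, length: int) -> bytes:
    # Everything before `pos` has to be decompressed to reach it.
    out = zlib.decompressobj().decompress(blob, pos + length)
    return out[pos:pos + length]


def block_compress(data: bytes, level: int = 9) -> list[bytes]:
    # Every chunk is an independent stream that can be decoded on its own.
    return [zlib.compress(data[i:i + BLOCK_SIZE], level)
            for i in range(0, len(data), BLOCK_SIZE)]


def block_read(blocks: list[bytes], pos: int, length: int) -> bytes:
    # Only the chunks that overlap the requested range are decompressed.
    first = pos // BLOCK_SIZE
    last = (pos + length - 1) // BLOCK_SIZE
    joined = b"".join(zlib.decompress(b) for b in blocks[first:last + 1])
    start = pos - first * BLOCK_SIZE
    return joined[start:start + length]
```

Both reads return the same bytes; the solid read simply costs time proportional to the offset, which is why block compression is the one suited to playing games directly from a compressed file.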


Q: It says prod.keys not found!

A: Dump them using Lockpick and copy them to %userprofile%/.switch/prod.keys
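For tools that need to find that file themselves, a minimal sketch of locating and reading it could look like this. It assumes the usual `name = HEXVALUE` line format of Lockpick dumps; the function names are hypothetical and this is not how nsZip loads its keys internally.

```python
from pathlib import Path
from typing import Optional


def default_keys_path() -> Path:
    # Path.home() resolves to %USERPROFILE% on Windows and $HOME elsewhere.
    return Path.home() / ".switch" / "prod.keys"


def load_keys(path: Path) -> dict[str, bytes]:
    """Parse `name = HEXVALUE` lines (assumed format), skipping blanks and comments."""
    keys: dict[str, bytes] = {}
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue
        name, _, value = line.partition("=")
        keys[name.strip()] = bytes.fromhex(value.strip())
    return keys


def require_keys(path: Optional[Path] = None) -> dict[str, bytes]:
    path = path or default_keys_path()
    if not path.is_file():
        raise FileNotFoundError(f"prod.keys not found at {path}; dump it with Lockpick first")
    return load_keys(path)
```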

Q: How much storage does this save?

A: It depends on the game. The compressed size is usually around 40-80% of the original size.

Q: What about XCI?

A: Compression to XCIZ is supported, but XCIZ to XCI recreation is still in development.

Q: The program throws an error or doesn't seem to behave as intended!

A: Open an issue on my GitHub page with exact steps to reproduce it.

Q: Does this compress installed games?

A: Not yet, but it's planned for the distant future.

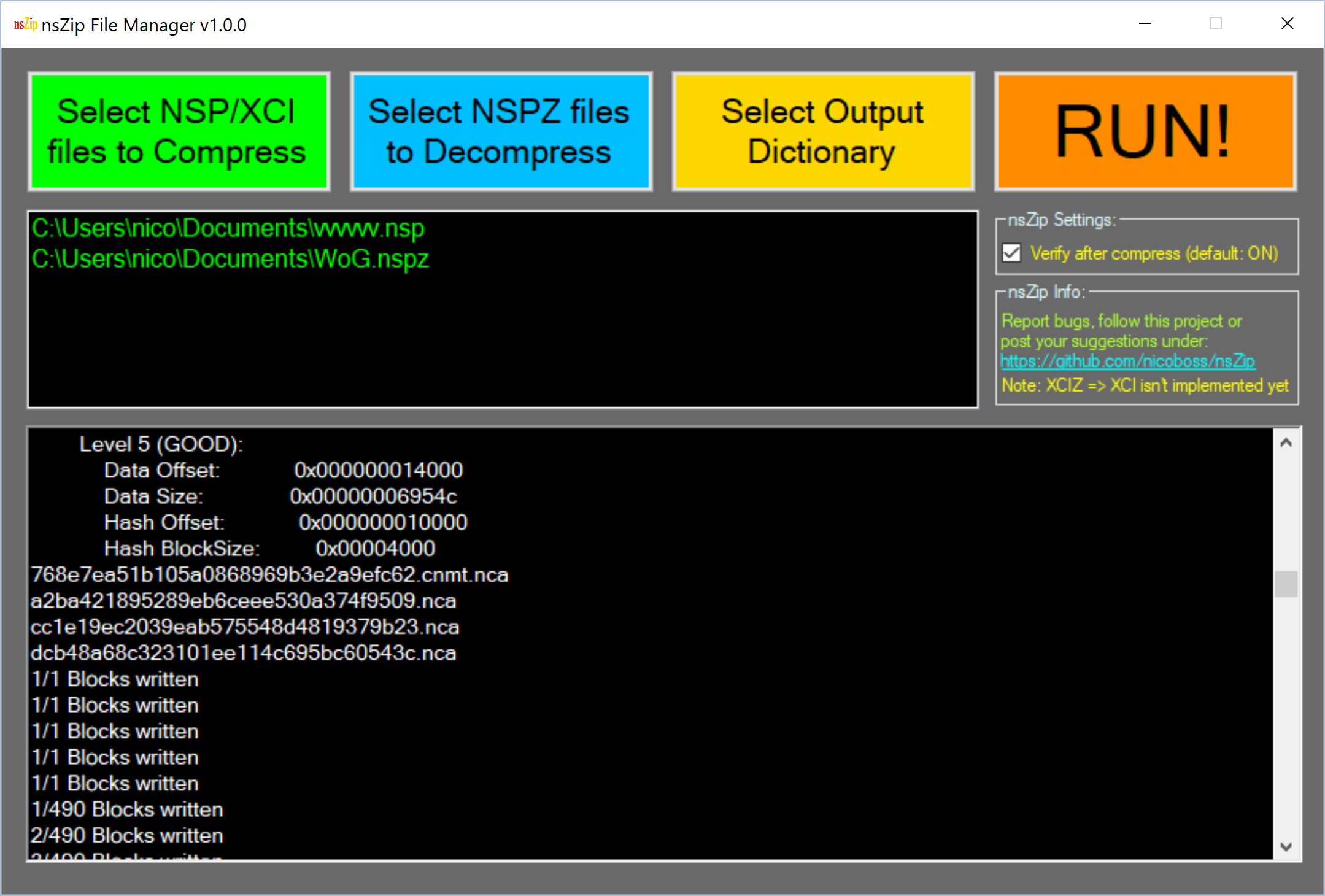

Screenshot:

nsZip v2.0.0 preview 2:

- Fixed XCI to XCIZ compression

- New UI made using WPF instead of WinForms

- Huge performance improvements

- Directly decrypts the NSP without extracting it first

- Directly decompresses NSPZ files without extracting them first

- Huge encrypting speed increase

- Huge SHA-256 verification speed increase

- Enhanced multithreading during compression

- Support for up to 400 CPU cores

- Improved game compatibility

- Command-line support

- More settings

- Added support for trimming NDV0 fragments that are split across multiple NCAs

- The user can now continue when a not yet implemented multi-file NDV0 fragment is detected. I'll add proper support for that multi-file NDV0 fragment format in the following days.

- The compression level can now be changed (tradeoff between speed and compression ratio)

- The block size can now be changed (tradeoff between random access time and compression ratio)

- NSZ implementation
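The two new knobs (compression level and block size) both trade something against compression ratio. A rough way to see the block-size side is to compress the same input with different settings and compare totals. This sketch uses the standard-library `zlib` instead of Zstandard, so its level numbers (1 to 9) do not correspond to nsZip's levels, and `compressed_size`/`sweep` are hypothetical helpers:

```python
import zlib


def compressed_size(data: bytes, block_size: int, level: int) -> int:
    """Total output size when every block_size chunk is compressed independently."""
    return sum(
        len(zlib.compress(data[i:i + block_size], level))
        for i in range(0, len(data), block_size)
    )


def sweep(data: bytes, block_sizes, levels) -> dict[tuple[int, int], int]:
    """Measure every (block size, level) combination on the same input."""
    return {(b, lv): compressed_size(data, b, lv) for b in block_sizes for lv in levels}


if __name__ == "__main__":
    sample = b"The quick brown fox jumps over the lazy dog. " * 50_000
    results = sweep(sample, [16 * 1024, 256 * 1024, 1024 * 1024], [1, 6, 9])
    for (block, level), size in sorted(results.items()):
        print(f"block={block // 1024:>5} KB  level={level}  size={size}")
```

Smaller blocks pay the per-stream overhead more often and give the compressor less context, so the total grows; in exchange, a random read has less data to decompress.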

